In the world of data analysis, some datasets behave like stubborn rivers—flowing unevenly, meandering through unexpected paths, and occasionally flooding unpredictably. The Box-Cox Transformation acts as an expert engineer, designing a system of channels and sluices to tame these turbulent waters, ensuring the flow becomes stable and predictable. It is a mathematical tool that helps data behave—stabilising variance and bringing non-normal distributions closer to the smooth rhythm of normality.

The Uneven Terrain of Data

Imagine surveying a rugged landscape filled with sharp peaks and deep valleys. Raw data can look very similar: erratic and resistant to analysis. When the spread of the data changes widely across its range, models like regression stumble, assumptions about normality break, and statistical inference loses power. Analysts often find themselves facing a landscape where prediction feels like guessing the next twist in a mountain road.

That’s where transformation enters as the great equaliser. Just as an artist adjusts lighting to bring balance to a photograph, data scientists use mathematical transformations to bring balance to data. Among them, the Box-Cox method stands tall for its elegance and adaptability—offering not just one, but an entire family of power transformations suited for different shapes of data.

A Family of Transformations, Not Just One

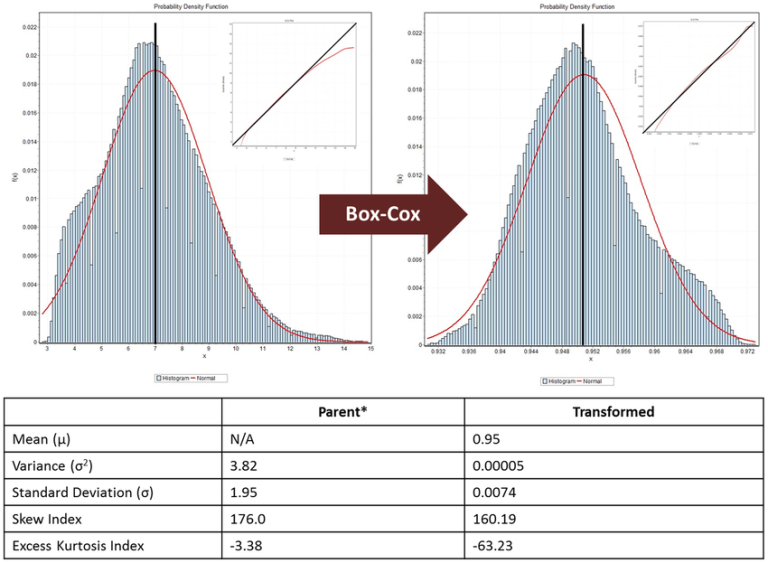

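The Box-Cox Transformation isn’t a single formula but a flexible spectrum. It introduces a parameter, λ (lambda), that dictates how strongly the data should be transformed. For strictly positive data y, the transformed value is (y^λ − 1)/λ when λ ≠ 0 and ln(y) when λ = 0. When λ = 1, the shape of the data is unchanged (every value simply shifts down by 1); when λ = 0, the transformation becomes logarithmic; λ = 0.5 acts like a square root and λ = −1 like a reciprocal. Because λ can take any real value, the method offers a continuum of adjustments rather than a handful of fixed recipes. This adaptability makes it a versatile tool for stabilising variance across diverse datasets, as long as every value is positive.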
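To make the definition concrete, here is a minimal Python sketch of the forward transformation. The function name `box_cox` is an illustrative choice, not a library API (SciPy offers a ready-made `scipy.stats.boxcox`). This version works on plain lists and rejects non-positive values, since the formula is only defined for y > 0.

```python
import math

def box_cox(values, lam):
    """Box-Cox transform of a list of strictly positive numbers.

    Returns (y**lam - 1) / lam for lam != 0 and ln(y) for lam == 0.
    Illustrative helper only; scipy.stats.boxcox is a production alternative.
    """
    if any(y <= 0 for y in values):
        raise ValueError("Box-Cox requires strictly positive data")
    if lam == 0:
        return [math.log(y) for y in values]
    return [(y ** lam - 1) / lam for y in values]

data = [1.0, 2.0, 4.0, 8.0]
print(box_cox(data, 1))    # [0.0, 1.0, 3.0, 7.0]: same shape, shifted down by 1
print(box_cox(data, 0))    # natural logarithms of the data
print(box_cox(data, 0.5))  # equals 2 * (sqrt(y) - 1) for each y
```

The λ = 0 branch is what makes the family continuous: as λ approaches 0, (y^λ − 1)/λ approaches ln(y), so the logarithm slots in naturally rather than being a special case bolted on.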

For learners stepping into advanced analytics through a Data Scientist course in Coimbatore, the Box-Cox approach serves as a window into the harmony between theory and application. It teaches that the goal of transformation is not to distort data but to reveal its underlying truth—to make patterns clearer, relationships stronger, and models more reliable.

Normality: The Hidden Symphony

Statistical methods like linear regression, ANOVA, and t-tests often whisper the same demand: “Let your errors be normal.” In real-world datasets, however, this harmony is rare. Some distributions are heavily skewed to the right (like incomes), while others tilt left (like scores on an easy exam). These skews can cause models to misbehave, producing misleading results or exaggerating specific effects.

The Box-Cox Transformation acts as a conductor, guiding the chaotic instruments of data toward a familiar rhythm. By applying the right power, it can pull a skewed distribution into balance: values of λ below 1 compress a long right tail, while values above 1 counteract a left skew, bringing the data closer to the elegant curve of normality. It can also even out the spread when variability grows with the level of the data. This step is valuable before applying methods that assume homoscedasticity (equal variance of the errors) and normally distributed errors, although no choice of λ guarantees exact normality.
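The variance-stabilising effect can be seen with a tiny, made-up example. The sketch below assumes two hypothetical groups whose standard deviation grows in proportion to their level; in that situation the logarithm (Box-Cox with λ = 0) turns a multiplicative difference into an additive shift and equalises the spread. Data whose spread grows in a different pattern would call for a different λ.

```python
import math

def spread(xs):
    """Population standard deviation of a list of numbers."""
    n = len(xs)
    mean = sum(xs) / n
    return math.sqrt(sum((x - mean) ** 2 for x in xs) / n)

# Hypothetical groups: the high group is the low group scaled by 10,
# so its standard deviation is also 10 times larger.
low = [1.0, 2.0, 3.0]
high = [10.0, 20.0, 30.0]
print(spread(low), spread(high))  # about 0.816 and 8.165

# Box-Cox with lambda = 0 (the log) turns the factor of 10 into an additive
# shift of ln(10), which leaves the spread of each group identical.
log_low = [math.log(y) for y in low]
log_high = [math.log(y) for y in high]
print(spread(log_low), spread(log_high))  # equal up to floating-point rounding
```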

The Craft of Choosing λ

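Selecting the right λ is part science, part art. Mathematically, one can choose the λ that maximises the profile log-likelihood, assuming the transformed data are normally distributed; this essentially identifies the transformation that makes the data “most normal.” In practice, analysts often let software do the search (SciPy’s scipy.stats.boxcox, for instance, estimates λ by maximum likelihood when no λ is supplied) or test a range of λ values and choose the one that best stabilises variance and symmetry.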
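The likelihood search can be sketched in a few lines of Python. The assumptions here: the transformed values are treated as independent draws from one normal distribution, additive constants are dropped from the log-likelihood, and a simple grid from −2 to 2 in steps of 0.01 stands in for a proper numerical optimiser like the one SciPy uses. The helper names are hypothetical. The term (λ − 1)·Σ ln y is the log-Jacobian of the transformation; without it, comparing variances across different λ values would be meaningless, because each λ rescales the data differently.

```python
import math

def box_cox(values, lam):
    """Forward Box-Cox transform; assumes strictly positive values."""
    if lam == 0:
        return [math.log(y) for y in values]
    return [(y ** lam - 1) / lam for y in values]

def profile_log_likelihood(values, lam):
    """Box-Cox log-likelihood for one lambda, additive constants dropped.

    Assumes the transformed values are i.i.d. normal, with mean and variance
    replaced by their maximum-likelihood estimates (variance divides by n).
    Also assumes the values are not all identical, so the variance is nonzero.
    """
    n = len(values)
    w = box_cox(values, lam)
    mean = sum(w) / n
    var = sum((x - mean) ** 2 for x in w) / n
    log_jacobian = (lam - 1) * sum(math.log(y) for y in values)
    return -0.5 * n * math.log(var) + log_jacobian

def best_lambda(values):
    """Grid search over lambda = -2.00, -1.99, ..., 2.00 (a sketch, not an optimiser)."""
    grid = [i / 100 for i in range(-200, 201)]
    return max(grid, key=lambda lam: profile_log_likelihood(values, lam))

# Toy data whose logarithms are evenly spaced.
print(best_lambda([1, 2, 4, 8, 16, 32, 64]))  # 0.0, i.e. the log transform
```

For this toy data the logarithms are perfectly symmetric, so the search lands exactly on λ = 0. Integer steps divided by 100 are used for the grid so that λ = 0 is hit exactly and the logarithm branch is actually evaluated.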

Yet, there’s an intuitive beauty to this process. Like tuning a musical instrument, each adjustment of λ fine-tunes the resonance of data. Too much transformation, and the melody feels unnatural; too little, and the noise persists. The Box-Cox method, therefore, teaches restraint and sensitivity—skills that every aspiring analyst should cultivate through a Data Scientist course in Coimbatore, where hands-on experimentation complements theoretical insight.
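Over- and under-transformation can be made visible by tracking skewness across λ. In this sketch, the toy data and helper names are illustrative assumptions, and skewness is measured as the third central moment divided by the cubed standard deviation. λ = 1 leaves the right skew in place, λ = 0 balances it, and pushing on to negative λ overshoots into left skew.

```python
import math

def box_cox(values, lam):
    """Forward Box-Cox transform; assumes strictly positive values."""
    if lam == 0:
        return [math.log(y) for y in values]
    return [(y ** lam - 1) / lam for y in values]

def skewness(xs):
    """Moment-based skewness: third central moment over the cubed standard deviation."""
    n = len(xs)
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n
    m3 = sum((x - mean) ** 3 for x in xs) / n
    return m3 / m2 ** 1.5

data = [1, 2, 4, 8, 16, 32, 64]  # toy right-skewed data, not a real dataset
for lam in (1, 0.5, 0, -0.5, -1):
    # Expect positive skew at lam = 1 (too little), about 0 at lam = 0,
    # and negative skew for lam < 0 (too much).
    print(f"lambda = {lam:>4}: skewness = {skewness(box_cox(data, lam)):+.3f}")
```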

Beyond Normality: Interpreting Results and Reversibility

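One of the strengths of the Box-Cox Transformation is its reversibility. After building a model on transformed data, one can back-transform predictions to their original scale with the inverse formula: (λw + 1)^(1/λ) for λ ≠ 0, or exp(w) for λ = 0, where w is a value on the transformed scale. This preserves interpretability, a cornerstone of trustworthy analytics. It’s like translating a poem into another language to understand its structure, then translating it back to appreciate its original rhythm. One caution: if the errors on the transformed scale are symmetric, back-transforming a predicted mean estimates the median on the original scale rather than the mean.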
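Reversing the transformation is equally short. This minimal sketch redefines a small hypothetical `box_cox` helper so the block stands alone and adds an `inv_box_cox` counterpart (SciPy provides `scipy.special.inv_boxcox` for the same purpose). It checks the round trip for a few λ values and illustrates the caution about means: back-transforming the average of logged data gives the geometric mean, not the arithmetic mean.

```python
import math

def box_cox(values, lam):
    """Forward Box-Cox transform for strictly positive values (hypothetical helper)."""
    if lam == 0:
        return [math.log(y) for y in values]
    return [(y ** lam - 1) / lam for y in values]

def inv_box_cox(values, lam):
    """Inverse transform: (lam * w + 1) ** (1 / lam), or exp(w) when lam == 0.

    Only defined where lam * w + 1 > 0, which always holds for outputs of box_cox
    because lam * w + 1 equals y ** lam there.
    """
    if lam == 0:
        return [math.exp(w) for w in values]
    return [(lam * w + 1) ** (1 / lam) for w in values]

data = [1.0, 2.0, 4.0, 8.0, 16.0, 32.0, 64.0]

# Round trip: transforming and back-transforming recovers the original values.
for lam in (0, 0.5, -1):
    restored = inv_box_cox(box_cox(data, lam), lam)
    print(lam, max(abs(a - b) for a, b in zip(restored, data)))  # at most tiny rounding error

# Back-transforming the mean of the logged data yields the geometric mean,
# which for this data equals the median (8), not the arithmetic mean.
logged = box_cox(data, 0)
back = inv_box_cox([sum(logged) / len(logged)], 0)[0]
print(back)                   # approximately 8.0
print(sum(data) / len(data))  # approximately 18.14
```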

Moreover, the transformation highlights a larger truth about data analysis: it’s less about forcing data into a shape and more about understanding its nature. By learning to manipulate scale without losing meaning, analysts develop a nuanced appreciation of how data behaves under different conditions—a skill as vital as any modelling technique.

Conclusion: The Gentle Power of Balance

The Box-Cox Transformation is a quiet yet powerful ally in the analyst’s toolkit. It doesn’t demand complex algorithms or vast computational power; a single power transformation, with one parameter to estimate, is often enough to make the data behave more predictably. Like a craftsman polishing a rough gemstone, the transformation reveals clarity where there was distortion.

In the evolving world of analytics, where data complexity grows every day, mastering such techniques remains essential. The Box-Cox method reminds us that before diving into deep learning or advanced models, the foundations of sound analysis—variance stabilisation, normality, and interpretability—must be strong.

Ultimately, it’s not just about taming unruly data, but about seeing its proper form—balanced, clear, and ready to tell its story.