Reinforcement learning (RL) is often explained as an agent learning to act by maximising cumulative reward. The reward function is the “scorecard” that tells the agent what success looks like. In many real-world problems, however, writing a correct reward function is difficult. If the reward is poorly specified, the system may learn undesirable shortcuts that still score well. Inverse Reinforcement Learning (IRL) addresses this challenge by turning the problem around: instead of giving the agent a reward and asking it to learn a policy, you observe expert behaviour and infer a reward function that could have produced that behaviour. For learners exploring applied AI methods through a data scientist course in Chennai, IRL is an important concept because it connects machine learning with human decision-making in a practical way.

Why We Need Inverse Reinforcement Learning

Designing rewards sounds simple until you try to do it for complex environments. Consider autonomous driving. You might think the reward is “reach the destination quickly,” but that ignores safety, comfort, legal compliance, and social norms. If you add penalties for collisions and lane departures, the car might still drive aggressively to optimise time. If you penalise abrupt braking, it might avoid braking even when necessary. This failure mode is known as reward misspecification: the agent optimises the reward as written rather than the behaviour the designer intended. It is a common reason RL systems behave unexpectedly.

IRL is useful when:

- Experts can demonstrate good behaviour, but cannot easily express it as a precise reward.

- The task involves nuanced trade-offs, such as safety versus speed.

- We want systems that reflect human preferences and constraints.

In other words, IRL helps you learn “what the expert seems to value” by watching what they do, rather than forcing you to encode those values manually. This is one reason IRL frequently appears in advanced modules of a data scientist course in Chennai focused on modern decision intelligence.

How IRL Works at a High Level

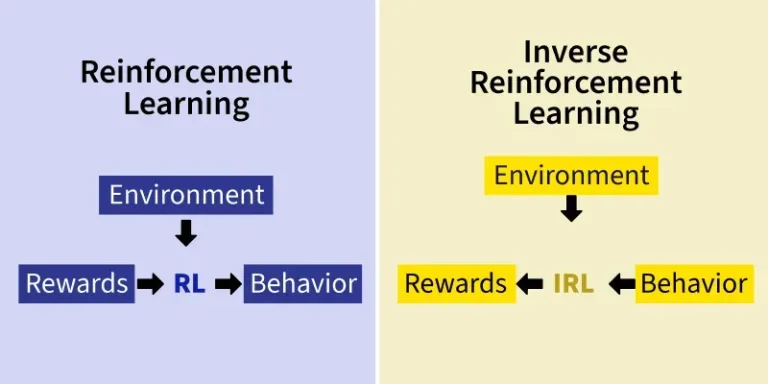

To understand IRL, it helps to contrast it with standard RL:

- RL: Reward function is known → learn the best policy.

- IRL: Expert demonstrations are known → infer a reward function that explains them.

An IRL pipeline typically includes the following steps:

- Collect demonstrations: You record expert trajectories. A trajectory is a sequence of states and actions, such as “car observed at position X, took action Y, moved to state Z.”

- Choose a reward representation: You define how rewards might depend on the environment. A common approach is to represent reward as a weighted combination of features. For instance, in driving, features may include distance to other vehicles, speed variance, and lane position.

- Infer the reward: You search for reward parameters that make the expert’s behaviour look optimal or near-optimal.

- Derive a policy: Once a reward is inferred, you can run RL to learn a policy that maximises that reward, ideally matching expert behaviour and generalising to new situations.
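The first two steps can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the three features (gap to the lead vehicle, speed variance, lane offset), the action labels, and the weight values are all hypothetical, chosen only to show what a trajectory and a linear reward might look like.

```python
from typing import List, Tuple

# Hypothetical state features: (gap to lead vehicle in metres,
# speed variance, lane offset in metres).
Features = Tuple[float, float, float]
# A trajectory is a sequence of (state features, action label) pairs.
Trajectory = List[Tuple[Features, str]]


def linear_reward(features: Features, weights: Features) -> float:
    """Reward as a weighted sum of features: r(s) = w . phi(s)."""
    return sum(w * f for w, f in zip(weights, features))


def trajectory_return(traj: Trajectory, weights: Features, gamma: float = 1.0) -> float:
    """Discounted sum of linear rewards along one trajectory."""
    return sum(
        (gamma ** t) * linear_reward(phi, weights)
        for t, (phi, _action) in enumerate(traj)
    )


# One made-up expert demonstration.
demo: Trajectory = [
    ((30.0, 0.1, 0.0), "keep_lane"),
    ((25.0, 0.2, 0.1), "slow_down"),
    ((28.0, 0.1, 0.0), "keep_lane"),
]

# Illustrative weights: reward a larger gap, penalise speed variance and lane offset.
weights: Features = (0.1, -1.0, -2.0)

print(trajectory_return(demo, weights))
```

In IRL the weights are the unknowns: the inference step searches for values under which the demonstrated trajectories score at least as well as the alternatives.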

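This process may sound straightforward, but the core problem is subtle: many different reward functions can explain the same behaviour. A reward of zero everywhere, for example, makes every policy optimal, so it is trivially consistent with any demonstration. IRL methods deal with this ambiguity using additional assumptions, such as regularisation, maximum entropy criteria, or priors over rewards.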
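The ambiguity is easy to reproduce on a toy problem. The sketch below assumes a hypothetical three-state corridor with deterministic `left` and `right` actions, and uses plain value iteration to find the greedy policy under three different reward functions. The MDP, the reward values, and the function name `greedy_policy` are illustrative assumptions.

```python
def greedy_policy(rewards, transitions, gamma=0.9, iters=200):
    """Value iteration on a small deterministic MDP.

    rewards[s] is the reward for entering state s;
    transitions[s][a] is the next state after taking action a in state s.
    Returns the greedy action for each state.
    """
    n = len(transitions)
    values = [0.0] * n

    def backup(s, a):
        nxt = transitions[s][a]
        return rewards[nxt] + gamma * values[nxt]

    for _ in range(iters):
        values = [max(backup(s, a) for a in transitions[s]) for s in range(n)]
    return [max(transitions[s], key=lambda a: backup(s, a)) for s in range(n)]


# Corridor of three states; state 2 is the goal end.
transitions = [
    {"left": 0, "right": 1},
    {"left": 0, "right": 2},
    {"left": 1, "right": 2},
]

candidate_rewards = {
    "goal bonus": [0.0, 0.0, 1.0],
    "scaled goal bonus": [0.0, 0.0, 5.0],
    "distance penalty": [-1.0, -0.5, 0.0],
}

policies = {name: greedy_policy(r, transitions) for name, r in candidate_rewards.items()}
for name, policy in policies.items():
    print(name, policy)
```

All three rewards lead to the same "always go right" policy, so demonstrations of that policy alone cannot tell them apart. This is why IRL methods need an extra criterion to pick among consistent rewards.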

Popular IRL Approaches and Their Intuition

Several families of IRL methods exist, each with a different guiding idea.

Feature matching and apprenticeship learning

One influential idea is to find a reward that makes the learner match the expert’s “feature expectations”: the average, typically discounted, feature values accumulated along a trajectory. If experts tend to keep distance from obstacles, maintain stable speed, and avoid sharp turns, an inferred reward should encourage those outcomes. The learner then tries to produce trajectories whose feature expectations are close to the expert’s.
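As a rough sketch, feature expectations can be estimated by averaging discounted feature sums over a set of trajectories. The two-dimensional features below (say, "kept safe distance" and "made a sharp turn") and the trajectories themselves are made up for illustration; the function names are assumptions as well.

```python
import math


def feature_expectations(trajectories, gamma=0.9):
    """Average discounted feature sum over a set of trajectories.

    Each trajectory is a list of feature vectors, one per visited state.
    """
    dim = len(trajectories[0][0])
    totals = [0.0] * dim
    for traj in trajectories:
        for t, phi in enumerate(traj):
            for i in range(dim):
                totals[i] += (gamma ** t) * phi[i]
    return [x / len(trajectories) for x in totals]


def feature_gap(mu_a, mu_b):
    """Euclidean distance between two feature-expectation vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(mu_a, mu_b)))


# Hypothetical features per state: (kept safe distance, made a sharp turn).
expert_trajs = [
    [(1.0, 0.0), (1.0, 0.0)],
    [(1.0, 0.0), (0.0, 1.0)],
]
learner_trajs = [
    [(0.0, 1.0), (0.0, 1.0)],
]

mu_expert = feature_expectations(expert_trajs, gamma=1.0)
mu_learner = feature_expectations(learner_trajs, gamma=1.0)
print(mu_expert, mu_learner, feature_gap(mu_expert, mu_learner))
```

When the reward is linear in the features, a small gap means the learner earns nearly the same return as the expert under any weight vector of bounded norm. Driving the gap down is therefore a reasonable training target even though the true weights are unknown.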

Maximum Entropy IRL

A widely used approach is Maximum Entropy IRL. The key intuition is: among all distributions over trajectories that match the expert’s feature expectations, prefer the one with the highest entropy, that is, the one that commits to nothing beyond what the demonstrations support. In practice, the probability of a trajectory grows exponentially with its reward, so higher-reward trajectories are more likely while lower-reward ones remain possible. This makes the model more robust when expert demonstrations are imperfect or noisy.
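The idea can be sketched on a deliberately tiny problem. The code below assumes the set of possible trajectories is small enough to enumerate, which is a simplification: practical implementations usually compute expected features with dynamic programming over the environment instead. The candidate trajectories, their (progress, risk) features, and the expert frequencies are all hypothetical.

```python
import math


def trajectory_probs(weights, traj_features):
    """P(trajectory) proportional to exp(w . f(trajectory)) over an enumerated set."""
    scores = [sum(w * f for w, f in zip(weights, feats)) for feats in traj_features]
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]


def fit_maxent(traj_features, expert_mean, lr=0.5, steps=5000):
    """Gradient ascent on the log-likelihood of the demonstrations.

    The gradient is (expert feature mean) - (model's expected features).
    """
    dim = len(expert_mean)
    weights = [0.0] * dim
    for _ in range(steps):
        probs = trajectory_probs(weights, traj_features)
        expected = [
            sum(p * feats[i] for p, feats in zip(probs, traj_features))
            for i in range(dim)
        ]
        weights = [w + lr * (e - m) for w, e, m in zip(weights, expert_mean, expected)]
    return weights


# Hypothetical candidate trajectories with features (progress, risk).
traj_features = [(1.0, 0.0), (2.0, 1.0), (0.0, 0.0)]
# Assumed expert behaviour: 70% cautious, 20% fast but risky, 10% idle.
expert_freq = [0.7, 0.2, 0.1]
expert_mean = [
    sum(q * feats[i] for q, feats in zip(expert_freq, traj_features))
    for i in range(2)
]

weights = fit_maxent(traj_features, expert_mean)
probs = trajectory_probs(weights, traj_features)
print(weights, probs)
```

In this toy setting the fitted model reproduces the expert's mix of behaviours rather than collapsing onto a single "best" trajectory, and the learned weight on risk comes out negative. That tolerance for variability is what makes the approach forgiving of noisy demonstrations.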

Bayesian IRL

Bayesian methods treat the reward function as uncertain and represent it as a probability distribution. Instead of producing one “best” reward, the method produces a posterior distribution over plausible rewards, combining a prior with how well each reward explains the demonstrations. This is useful in high-stakes settings because it allows you to reason explicitly about how uncertain the inferred objective is.
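A heavily simplified sketch of this idea follows. It assumes a small discrete set of candidate reward weights and an expert who makes one-step choices with softmax (Boltzmann) noise. Full Bayesian IRL works with sequential decisions and typically relies on sampling methods. The candidate names, features, and demonstrations here are hypothetical.

```python
import math


def choice_likelihood(weights, options, chosen, beta=1.0):
    """P(expert picks `chosen` among `options`) for a softmax-rational expert."""
    scores = [beta * sum(w * f for w, f in zip(weights, feats)) for feats in options]
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    return exps[chosen] / sum(exps)


def reward_posterior(candidates, prior, demos, beta=1.0):
    """Posterior over candidate reward weights given observed expert choices."""
    unnormalised = []
    for weights, p in zip(candidates, prior):
        likelihood = 1.0
        for options, chosen in demos:
            likelihood *= choice_likelihood(weights, options, chosen, beta)
        unnormalised.append(p * likelihood)
    z = sum(unnormalised)
    return [u / z for u in unnormalised]


# Two options described by (progress, risk): cautious vs fast but risky.
options = [(1.0, 0.0), (2.0, 1.0)]
# Hypothetical candidate rewards, as weights on (progress, risk).
names = ["speed-focused", "safety-focused", "indifferent"]
candidates = [(1.0, 0.0), (1.0, -3.0), (0.0, 0.0)]
prior = [1 / 3, 1 / 3, 1 / 3]
# Assumed demonstrations: the expert chose cautious four times, risky once.
demos = [(options, 0)] * 4 + [(options, 1)]

posterior = reward_posterior(candidates, prior, demos)
for name, p in zip(names, posterior):
    print(name, round(p, 3))
```

The safety-focused reward ends up with the most posterior mass, but the other candidates are not ruled out. That residual uncertainty is the information a single point estimate would discard.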

If you are enrolled in a data scientist course in Chennai, these approaches provide a valuable lens on how optimisation, probability, and behavioural modelling come together in real AI systems.

Real-World Applications of IRL

IRL is used when we care about aligning automated decisions with expert judgement or human preferences.

- Robotics: Robots can learn tasks like object handover, cleaning strategies, or safe navigation by watching humans perform them.

- Autonomous driving: Systems can infer comfort and safety preferences from skilled drivers, capturing behaviours that are hard to encode as rules.

- Healthcare operations: IRL can help model clinician decision patterns in scheduling, triage, or treatment planning, provided ethical and privacy safeguards are followed.

- Recommendation and personalisation: While not always framed as IRL, similar ideas can infer what users value from observed choices, then optimise experiences accordingly.

In each case, the promise is the same: learn the objective from behaviour, then optimise decisions using that objective.

Practical Challenges and Best Practices

IRL is powerful, but it has pitfalls that practitioners should anticipate.

- Ambiguity of rewards: Multiple rewards can explain the same demonstrations. Regularisation, maximum entropy principles, and Bayesian approaches help manage this.

- Quality of demonstrations: If the expert behaviour is inconsistent, biased, or context-dependent, the inferred reward may inherit those issues.

- Generalisation limits: The reward learned from one environment may not transfer to another unless the feature representation captures the right invariants.

- Safety and alignment: Inferred rewards must be tested carefully to ensure the learned policy behaves safely in edge cases.

Good IRL projects invest heavily in defining meaningful features, collecting representative demonstrations, and validating behaviour under stress tests.

Conclusion

Inverse Reinforcement Learning tackles a core difficulty in decision-making systems: specifying the right reward. By inferring rewards from expert demonstrations, IRL offers a way to capture nuanced preferences and constraints that are hard to write down explicitly. It is particularly relevant in robotics, autonomous systems, and complex operational decisions where behaviour reflects unspoken trade-offs. For professionals strengthening applied AI skills through a data scientist course in Chennai, IRL is a valuable topic because it teaches you how to move from observed behaviour to a formal objective—one of the most practical bridges between human expertise and machine optimisation.